Hadoop is a framework developed for storing and analysing very large volumes of business data. Suppose you have a file larger than your system’s storage capacity, so you can’t store it on a single machine. Hadoop makes it possible to store files larger than any one server could hold by spreading them across several servers. You can therefore store very large files, and also a large number of them.
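To make the idea of storing a file that is bigger than any single server more concrete, here is a minimal sketch of how a large file could be cut into fixed-size blocks and spread over several machines. It is a toy model and not the Hadoop API: the function names `plan_blocks` and `bytes_per_server`, the server names and the round-robin placement are all assumptions made for illustration, and the 128 MB block size is simply an assumed value. Real Hadoop storage also keeps several copies of each block, which this sketch leaves out.

```python
# Toy model only: this is NOT the Hadoop/HDFS API, just the idea behind block storage.
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size of 128 MB


def plan_blocks(file_size, servers, block_size=BLOCK_SIZE):
    """Return a list of (block_index, server, bytes_in_block) assignments."""
    if file_size < 0 or block_size <= 0 or not servers:
        raise ValueError("need a non-negative size, a positive block size and at least one server")
    plan = []
    offset = 0
    index = 0
    while offset < file_size:
        # The last block may be smaller than block_size.
        length = min(block_size, file_size - offset)
        # Hypothetical placement rule: simple round-robin over the servers.
        server = servers[index % len(servers)]
        plan.append((index, server, length))
        offset += length
        index += 1
    return plan


def bytes_per_server(plan):
    """Sum up how many bytes each server ends up holding."""
    totals = {}
    for _, server, length in plan:
        totals[server] = totals.get(server, 0) + length
    return totals


if __name__ == "__main__":
    one_gb = 1024 ** 3
    plan = plan_blocks(one_gb, ["server-a", "server-b", "server-c"])
    print(len(plan), "blocks")
    print(bytes_per_server(plan))
```

No single server in this sketch has to hold the whole file. Each one stores only its share of the blocks, which is why the total file size can exceed the capacity of any one machine.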

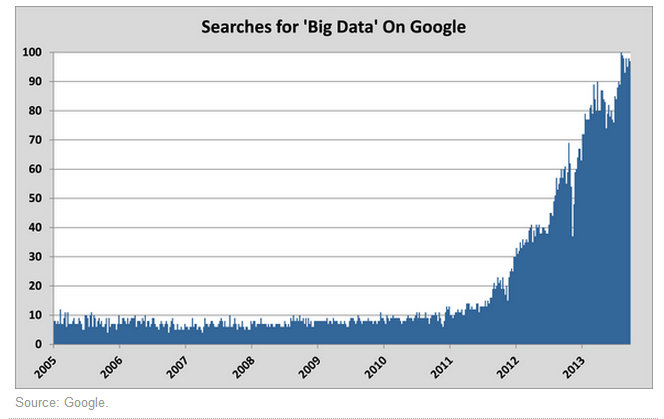

Big Data is a term used to signify large amounts of data. However, the term “Big Data” today is often used to indicate the technologies that handle such data, and Hadoop is one of those technologies (read our article on Big Data).

Importance and Benefits

Hadoop was built to deal with large volumes of complex data, both structured and unstructured.

- Hadoop is used in a number of domains. In finance, Hadoop helps with risk analysis. In online retail, Hadoop helps businesses deliver better search results, so that customers are more likely to buy what they are shown.

- Hadoop is also used for tasks such as predictive analysis, fraud detection, log analysis and image processing, wherever gigabytes, terabytes or even petabytes of data have to be processed.

- A crucial reason for Hadoop's importance is that tasks are often completed in less time and at substantially lower cost.

- Hadoop also works considerably well with public cloud-based platforms.
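As a rough illustration of the log analysis use case mentioned above, the sketch below counts log levels with a split-then-combine pattern, the style of processing that Hadoop's MapReduce model applies across many machines. This is a single-process sketch and does not use Hadoop itself: the function names `count_levels`, `merge_counts` and `analyse`, and the log line format `<timestamp> <LEVEL> <message>`, are assumptions made for the example.

```python
from collections import Counter
from functools import reduce


def count_levels(chunk):
    """Map step: count log levels within one chunk of log lines."""
    counts = Counter()
    for line in chunk:
        parts = line.split()
        # Assumed line format: "<timestamp> <LEVEL> <message>"; other lines are skipped.
        if len(parts) >= 2:
            counts[parts[1]] += 1
    return counts


def merge_counts(left, right):
    """Reduce step: combine the partial counts of two chunks."""
    return left + right


def analyse(chunks):
    """Process each chunk independently, then merge the partial results."""
    return reduce(merge_counts, map(count_levels, chunks), Counter())


if __name__ == "__main__":
    # Two chunks stand in for pieces of a log file held on different servers.
    chunks = [
        ["10:00:01 ERROR disk full", "10:00:02 INFO job started"],
        ["10:00:03 ERROR timeout", "10:00:04 WARN slow response"],
    ]
    print(analyse(chunks))
```

Because each chunk is counted on its own, the map step could in principle run on the server that already holds that chunk, with only the small partial counts being sent elsewhere to be merged.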

Hadoop has now become a single solution for businesses that need faster, stable processing of large and growing volumes of data at lower cost.

Did you know? The New York Times has used Hadoop to convert around 4 million files into PDF in less than 36 hours.

Big Data

Big Data has become essential for all major enterprises. Analytical solutions like fraud detection, web advertising, social media analysis and web traffic management involve large volumes of both structured and unstructured data. Implementing Big Data technologies has thus become a priority for every enterprise.

Prerequisites for Learning Hadoop

There is no specific prerequisite for learning Hadoop, but since it is all about Big Data analysis and is built in Java, an understanding of object-oriented programming is definitely an advantage.

You should have basic knowledge of concepts such as data warehousing, parallel and distributed computing, data analysis and web analytics.

Job Opportunities

Hadoop is one of the most widely used Big Data technologies in the world. According to the IT research firm Gartner, Big Data is expected to create more than 4.4 million job opportunities by 2015. Here are some related job roles:

1) Data Statisticians

2) Operations Research Engineers

3) Business Managers

4) Market Research Analysts

5) Financial Analysts

6) Big Data Scientists

7) Database Administrators

Future

According to another report by Gartner, 64% of surveyed companies indicate that they’re establishing or planning Big Data projects.

The demand for Big Data is likely to be largest in health care, retail and manufacturing, creating millions of job opportunities worldwide. However, only one-third of these positions are estimated to be filled. This is because there is a large talent gap in Big Data: the number of professionals skilled in this field is much smaller than the requirement.

Technology is changing rapidly and Big Data holds a major share in this revolution. If you’re in Bangalore and interested in learning about Big Data and Hadoop, refer to this page.

If you’re not in Bangalore, you can post your Hadoop Big Data training requirement here.